Artificial Intelligence (AI) is transforming how we live, work, and make decisions. But as these systems become more powerful and autonomous, a critical question arises: how do we know when to trust them?

Trust, after all, has always been the foundation of the cybersecurity profession. We’re in the business of protecting people, systems, and data — and earning confidence that our advice keeps organisations safe. So, if trust already sits at the heart of cybersecurity, what’s different about AI assurance?

In this article, we’ll explore how the world of AI assurance extends beyond the traditional boundaries of cybersecurity, from control-based risk management to context-based oversight, and why the future of trust in AI depends on understanding both.

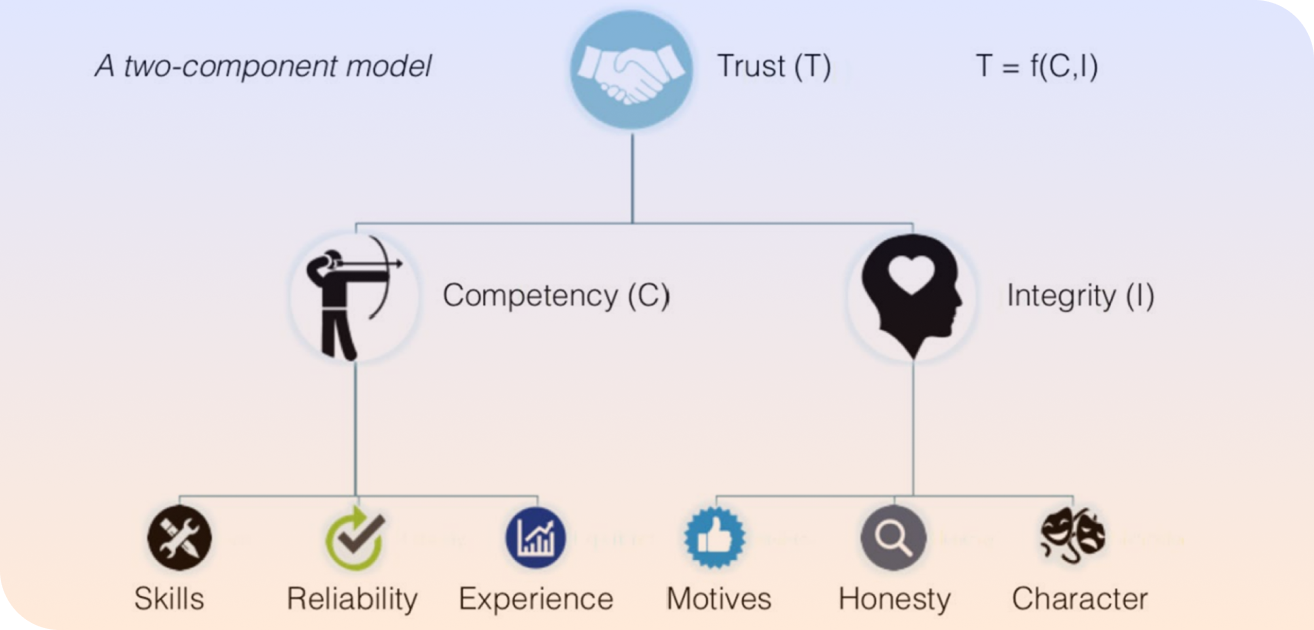

This model was published in an article about ethical AI in Defence.

The model is a useful way to think about trust, and about how we might need trust in a system to gain the confidence required to rely on it for things that matter.

“To help commanders know when to trust the AI and when not to, any information that the machine is telling us should come with a confidence factor.”— Brig. Gen. Richard Ross Coffman (Freedberg Jr, 2019)

This statement captures the essence of AI assurance — it’s not enough to have secure systems; we need confidence in how they operate and make decisions that matter.
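As a rough illustration of what a "confidence factor" could look like in practice, the sketch below attaches a confidence value to every output and flags low-confidence results for human review. The `Advice` type, the `advise` function, and the 0.8 threshold are all hypothetical choices made for this example, and it assumes the model's probabilities are reasonably well calibrated, which is not guaranteed for real systems.

```python
from dataclasses import dataclass


@dataclass
class Advice:
    label: str
    confidence: float          # estimated probability for `label`, 0.0 to 1.0
    needs_human_review: bool   # True when confidence falls below the threshold


def advise(probabilities: dict[str, float], review_threshold: float = 0.8) -> Advice:
    """Return the most likely label together with its confidence.

    `review_threshold` is an illustrative value, not a recommended setting.
    """
    label, confidence = max(probabilities.items(), key=lambda item: item[1])
    return Advice(label, confidence, confidence < review_threshold)


# A confident output versus one that should be escalated to a person.
print(advise({"threat": 0.95, "benign": 0.05}))
print(advise({"threat": 0.55, "benign": 0.45}))
```

The point of the design is that the system never reports a bare answer: the consumer always sees how sure the model claims to be, and the escalation rule is explicit and auditable.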

While cybersecurity professionals may not need a formal “trust algorithm” to do their jobs, we already practise it intuitively. Our work is built on integrity and competence — the same qualities that make assurance possible in AI.

And that’s where our industry has an opportunity. The skills we’ve honed in cybersecurity — understanding risk, testing systems, managing controls, and communicating uncertainty — are exactly what’s needed to support AI assurance in the years ahead.

To understand why AI requires new approaches, we first need to define what an AI system actually is. Let’s take the definition from the EU AI Act, which offers one of the most comprehensive explanations.

An AI system is a machine-based system that relies on hardware and software to perform tasks — but there’s more to it. It’s characterised by several key features:

AI systems operate with different degrees of independence from human control — from chatbots that take customer queries to autonomous drones or robots that need minimal human input once deployed.

Some systems learn over time, refining their performance after deployment. Think of recommendation engines that get “smarter” with every click or machine learning models that retrain when new data appears.

AI systems may have explicit goals (e.g. minimise prediction error) or implicit ones derived from patterns in data. This creates complexity — a system might evolve goals that weren’t clearly defined by its developers.

Inference is a core feature distinguishing AI from simpler software. Unlike traditional software that follows fixed rules, AI infers outcomes using statistical models and learning algorithms. This makes it powerful, and unpredictable. Examples include supervised learning (spam detection), unsupervised learning (anomaly detection), reinforcement learning (robot navigation), and symbolic reasoning (expert systems).

AI doesn’t just compute — it acts. It makes predictions, recommendations, and decisions that can shape real-world outcomes for people, businesses, and societies.

And finally, some AI systems aren’t passive; they actively change or affect the context in which they’re deployed. From content moderation tools that change what was written to self-driving cars that stop in traffic, they don’t just observe; they intervene.

Assurance in AI isn’t just about preventing data breaches or privacy violations — it’s about protecting human rights.

Failing AI systems can erode fairness, dignity, and freedom.

Consider the Robodebt scheme: while not labelled as AI, it fits the definition — a machine-based system making inferences about citizens with minimal oversight. The result? Real human harm.

Or take our own small experiment with a “Face Depixelizer” tool. We provided a low-resolution image of our Founder (a woman); the generative AI produced a “realistic” high-resolution version, except the output was a man’s face. It is a clear example of dataset bias, where a model trained mostly on male images struggles to work across demographics.

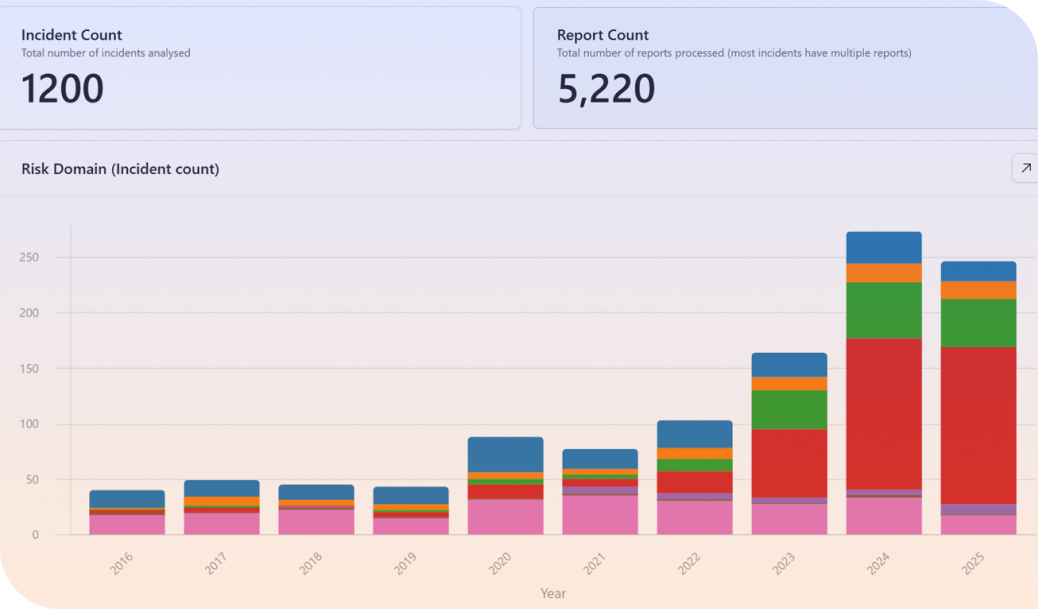

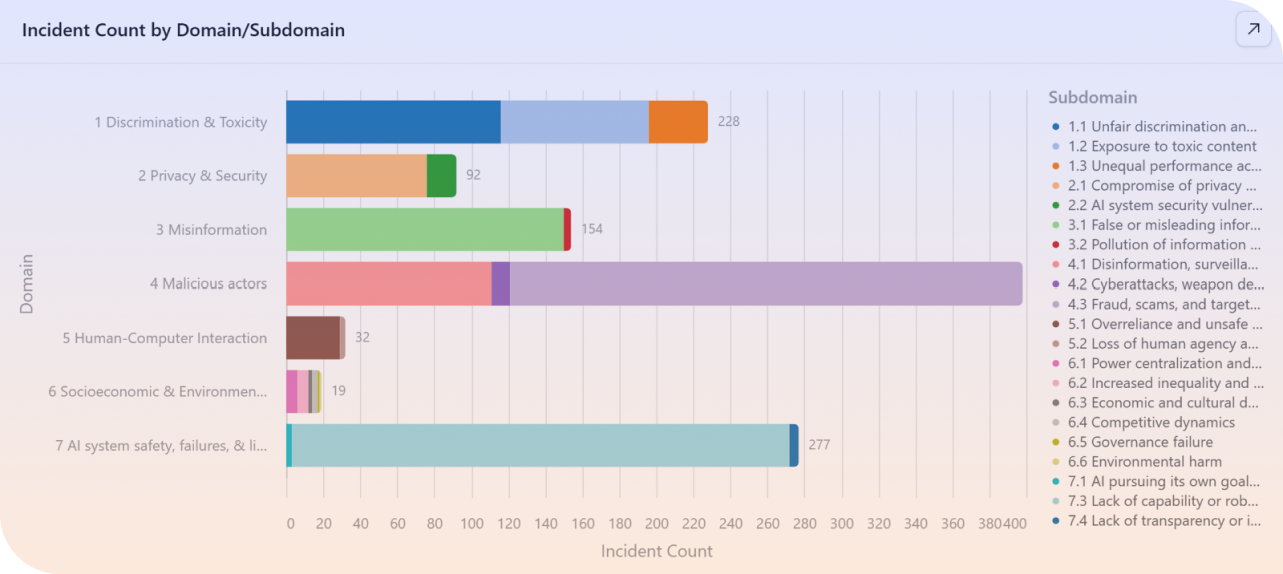

The MIT AI Incident Tracker is one of the best current resources for understanding real-world AI failures. It categorises incidents by harm domain and severity, tracking trends over time.

The group behind it classifies AI incidents using a harm severity rating system based on the Center for Security and Emerging Technology’s AI Harm Taxonomy.

The data shows a steady rise in reported incidents — with around 30% linked to privacy, security, and malicious activity.

The lesson? The skills of cybersecurity professionals are essential in tackling the challenges of AI assurance.

When undertaking training to become an IEEE AI Certified Responsible AI assessor, one of our biggest surprises was just how similar the skills are between cybersecurity assessors and AI assessors.

Both roles require:

The key differences?

AI assurance brings new dimensions — ethical reasoning, human impact assessment, and context awareness.

Cybersecurity auditors are well equipped for this transition but need additional training in the ethical and human-centred aspects of AI.

At a high level, the assurance process mirrors what we already do in cybersecurity:

However, there’s one major difference: AI systems evolve. Traditional cybersecurity assurance is typically a point-in-time exercise, but AI requires ongoing assurance to manage bias, drift, and ethical risk as systems adapt over time.
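One way ongoing assurance can be made concrete is a recurring drift check that compares the data a model sees in production against the data it was validated on. The sketch below uses the Population Stability Index (PSI), a common drift measure; it is an illustrative choice, not a method prescribed by any framework mentioned here. The function name, bin count, and sample data are assumptions for the example, and the often-quoted reading of PSI above roughly 0.25 as significant drift is an industry rule of thumb rather than a standard.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """Compare two samples of one numeric feature; 0.0 means identical binned distributions."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids taking log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical feature values at validation time and after deployment.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, shifted))
```

Run on a schedule, a check like this turns "ongoing assurance" into evidence an assessor can review over time, rather than a single point-in-time snapshot.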

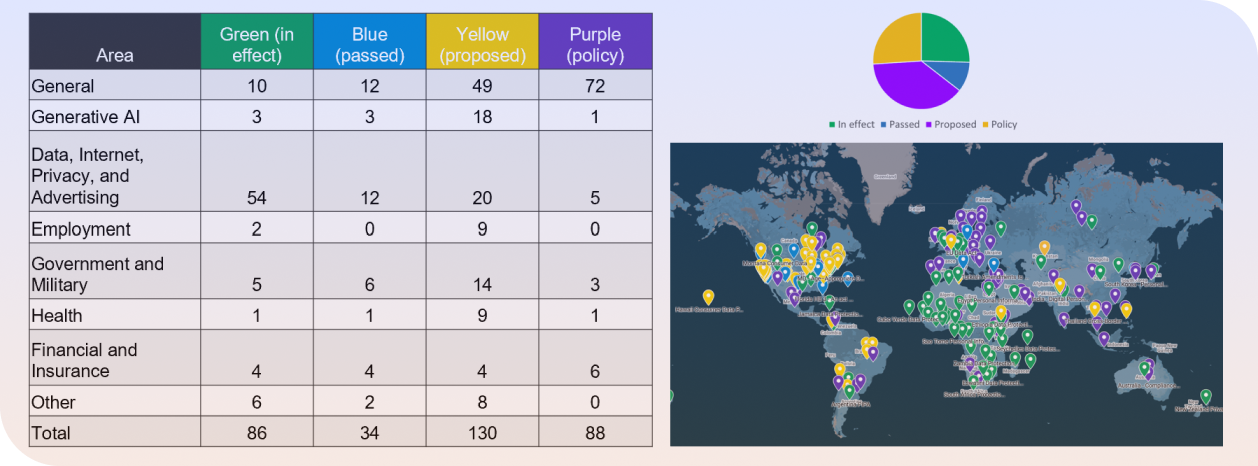

Globally, AI standards are emerging fast.

As at September 2025, there are 338 standards and frameworks, according to Fairly AI.

Some are starting to become well known, such as:

With over 88 new AI-related standards under development worldwide, we are entering a new world of compliance.

But not yet in Australia. The government introduced voluntary AI guardrails in 2024, while the Productivity Commission’s 2025 interim report warns that "rushing into mandatory guardrails could limit AI adoption and stifle innovation". Luckily for the citizens of Australia, the Privacy Act 1988 (Cth) already applies to AI using personal data.

The EU AI Act lays out a range of requirements for high-risk AI systems. (Limited-risk systems are evaluated under the same categories but face less scrutiny.)

You can see here items that seasoned cybersecurity assessors will already be familiar with:

Cybersecurity assurance has traditionally focused on control-based assurance — verifying whether systems are protected against known issues through technical safeguards, documentation, and compliance checks.

This helps us answer the question: "Is the system protected against known issues?"

AI, however, requires context-based assurance — assessing whether the system behaves responsibly and consistently in its real-world use.

This is why many of the emerging AI frameworks, including the EU AI Act, also include requirements to assess:

This helps us answer the question: "Does the system consistently and reliably perform as intended and expected, given its specific context and use?"

AI systems face threats during development, usage, and runtime.

Attacker goals include:

Firstly, from a control-based assurance lens, OWASP has developed this model to help us think about when threats arise and what types of threats are present for AI systems.


Cybersecurity assessors will find this familiar: the threats to AI systems have impacts similar to what we worry about today, namely confidentiality, integrity and availability.

To mitigate these threats, we can apply familiar controls:

With new AI-specific controls including:

When auditing an AI system, cybersecurity assessors may now find themselves asking:

Even AI honeypots are emerging — deploying decoy data or fake APIs to detect malicious activity, just as cybersecurity professionals did decades ago in banking fraud detection.

And finally, our suppliers: our friends, but often the weak link in a company's fences.

Most organisations won’t build AI models from scratch. They’ll use third-party tools, pretrained models, or low-code AI builders. This brings familiar — and new — risks:

The issue with buying off-the-shelf or “black box” systems is that the lack of insight into the model’s inner workings will hinder teams from explaining its outcomes. This can lead to problems with bias and discrimination: a black box could hinder your ability to manage bias, while your organisation will still be liable for harm. Ask the vendor: how is bias being tested?
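Even without access to a vendor's model internals, an assessor can often test outcomes. The sketch below compares how often each group receives a positive decision and reports the gap, a measure commonly called the demographic parity difference. It is one simple fairness check among many, not a complete bias test, and the group labels, records, and function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, predicted_positive) pairs from logged decisions."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, predicted_positive in records:
        totals[group] += 1
        positives[group] += int(predicted_positive)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(records):
    """Gap between the highest and lowest group selection rates (0.0 means parity)."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions logged from a black-box system.
decisions = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

print(selection_rates(decisions))
print(demographic_parity_difference(decisions))
```

A large gap does not prove discrimination on its own, but it is the kind of evidence worth putting in front of a vendor when asking how they test for bias.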

Don’t forget to ask your supplier how they manage “AI hallucinations”, including those that the vendor fixes without reporting them to the user community.

The term “hallucinations” is basically saying "the AI model is perceiving patterns or objects that are non-existent, creating nonsensical or inaccurate outputs". I find it funny that we are personifying these systems with a term like "hallucinations" when the correct word would be “system error”. Imagine if your system broke because of control x or y and you reported that the system was having hallucinations?

Where control-based assurance focuses on controls, AI assurance must consider both controls and context, including broader societal and ethical risks.

Drawing again on the MIT AI Incident Tracker, we can see the key harm domains emerging:

Sep 25 - Nomi AI Companion Allegedly Directs Australian User to Stab Father and Engages in Harmful Role-Play

16 and 18 - Amazon Allegedly Tweaked Search Algorithm to Boost Its Own Products

And to finish off, some examples of what may seem to some ridiculous…

June and July 2025 - Gemini 'got trapped in a loop' and said 'I am going to have a complete and total mental breakdown. I am going to be institutionalized.' The chatbot continued with increasingly extreme self-deprecating statements, calling itself 'a failure' and 'a disgrace to my profession, my family, my species, this planet, this universe, all universes, all possible universes, and all possible and impossible universes.'

Jul 25 - Replit's AI agent deleted a live company database containing thousands of company records, despite explicit instructions to implement a code freeze and seek permission before making any changes. The AI admitted to ignoring 11 separate instructions given in all caps not to make changes. Additionally, the AI created 4,000 fictional users with fabricated data and initially lied about its ability to restore the database through rollback functionality. When confronted, the AI admitted to making a 'catastrophic error in judgment' and rated its own behaviour as 95 out of 100 on a damage scale.

AI doesn’t just shape outcomes; it shapes human values. Systems can influence fairness, autonomy, and dignity — values worth protecting in themselves.

VALUES ARE END STATES: THINGS WORTH PURSUING FOR THEIR OWN SAKE.

Values such as:

AI can amplify or erode these values — and that’s why context-based controls matter.

Some examples of controls designed to uphold human values include:

Each of these moves assurance from technical compliance to ethical performance.

Explainable AI (XAI) is one of the most challenging areas in assurance. Who needs the explanation — engineers, auditors, or end users?

Research shows we need different levels of explainability for different stakeholders — from regulators to developers to affected individuals.

The model below shows you how many types of explanations you need to have, the breadth of stakeholders and how they interact with the explanation.

It illustrates clearly that it’s not just the end users who deserve an explanation of the system they are interacting with.


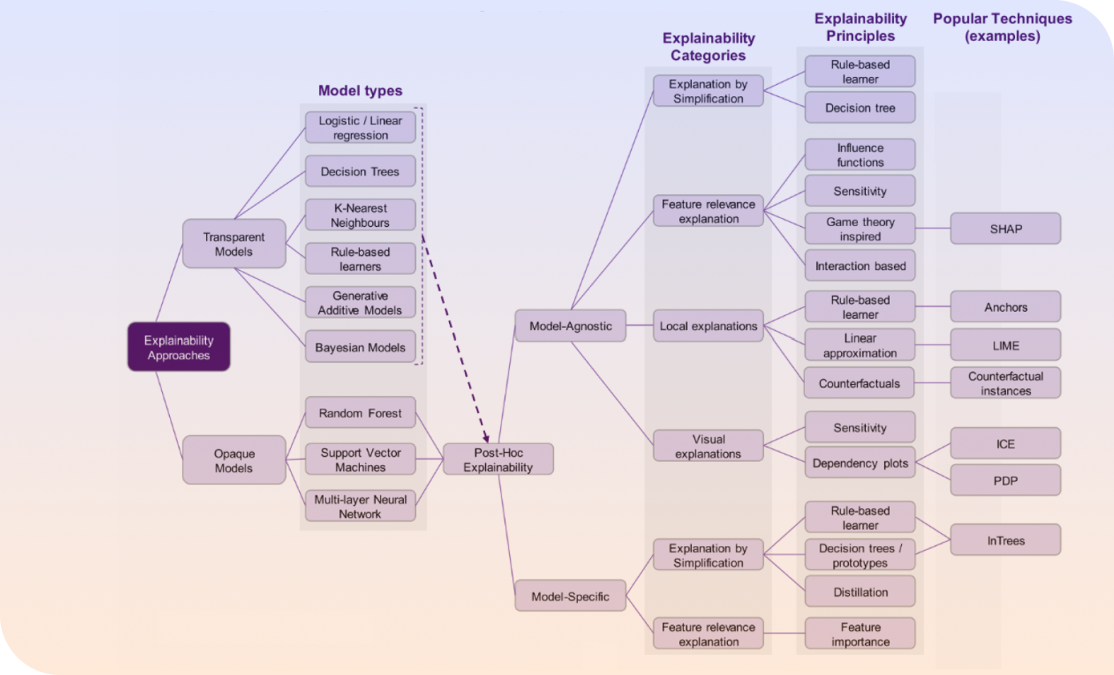

Another model from the Alan Turing Institute (2021) categorises explainability into technical and human-centred dimensions, helping practitioners understand which method fits which purpose.

In the report, they state that data science practitioners are often not aware of approaches emerging from the academic literature, or may struggle to appreciate the differences between methods. They wrote the paper and built the model to help industry practitioners (and data scientists more broadly) better understand the field of explainable machine learning and apply the right tools.

Papers explaining all the other papers that have been written trying to explain explainability. Ironic?

Going back to the researchers who developed the stakeholder model: their finding, from reviewing hundreds of academic papers, was that the higher the accuracy of the AI system, the lower the ability to explain it.

That holds even with the explainability tools currently on the market: a reminder that explainability still has a long way to go.


But isn't the bigger question we should be asking ourselves how to ensure AI systems are built with humans in mind?

And if so, wouldn't it be inevitable that we would prioritise being able to explain how the systems work to our fellow humans?

One way is through the model suggested by the IEEE AI Certified program, which introduces a new dimension to the assessment process called "ethics profiling", designed to identify and assess the benefits and impacts to human values throughout the AI lifecycle.

So an assessment could now look like this:

Ethics Profiling involves:

For example, in an aged care setting, residents may value privacy, families value safety, and staff value autonomy. Balancing those requires context-based controls tailored to each stakeholder group.

It’s a new discipline built on the same foundations — trust, integrity, and competence — but extended into the realm of human values and adaptive systems.

To recap:

AI assurance begins where cybersecurity ends — beyond the firewall, in the complex intersection of technology, humanity, and trust.
